Greg Cordover

Thoughts & projects

Reverse Image Searching

Checking if files are identical is a trivial task. You could directly compare all their bytes or perhaps compute a hash of each file and compare those. Trying to compare the similarity of files’ content is an entirely more difficult task. What does similarity mean? How can a computer determine if two photos contain the same content after getting resized or compressed from being reposted on Reddit and Twitter?

Due to the lossy nature of most image compression algorithms, you cannot compare the bytes of images to determine if they have the same content. Every time a JPEG image is decoded and encoded additional data is changed or lost. While formats like PNG are lossless, resizing images will also change their content preventing direct comparisons.

There must be ways to get around this. Google Images, TinEye, and others allow you to reverse image search compressed or resized images. While I can’t speak to exactly how their searches work at such incredible scale, an accessible solution is called perceptual image hashing. Cryptographic hashing algorithms like the MD5 or SHA256 are designed to generate an unpredictable result. In order to do this, they are optimized to change as much as possible for similar inputs. Perceptual hashes are the opposite—they are optimized to change as little as possible for similar inputs.

Perceptual hashes allow you to calculate a value that represents the content of an image. You may then compare images by looking at the number of bits which differ between the input and the image you are comparing it against. This difference is known as the hamming distance.

Let’s take a look at an example. There’s also the playground at the end of this post to give it a try with your own images.

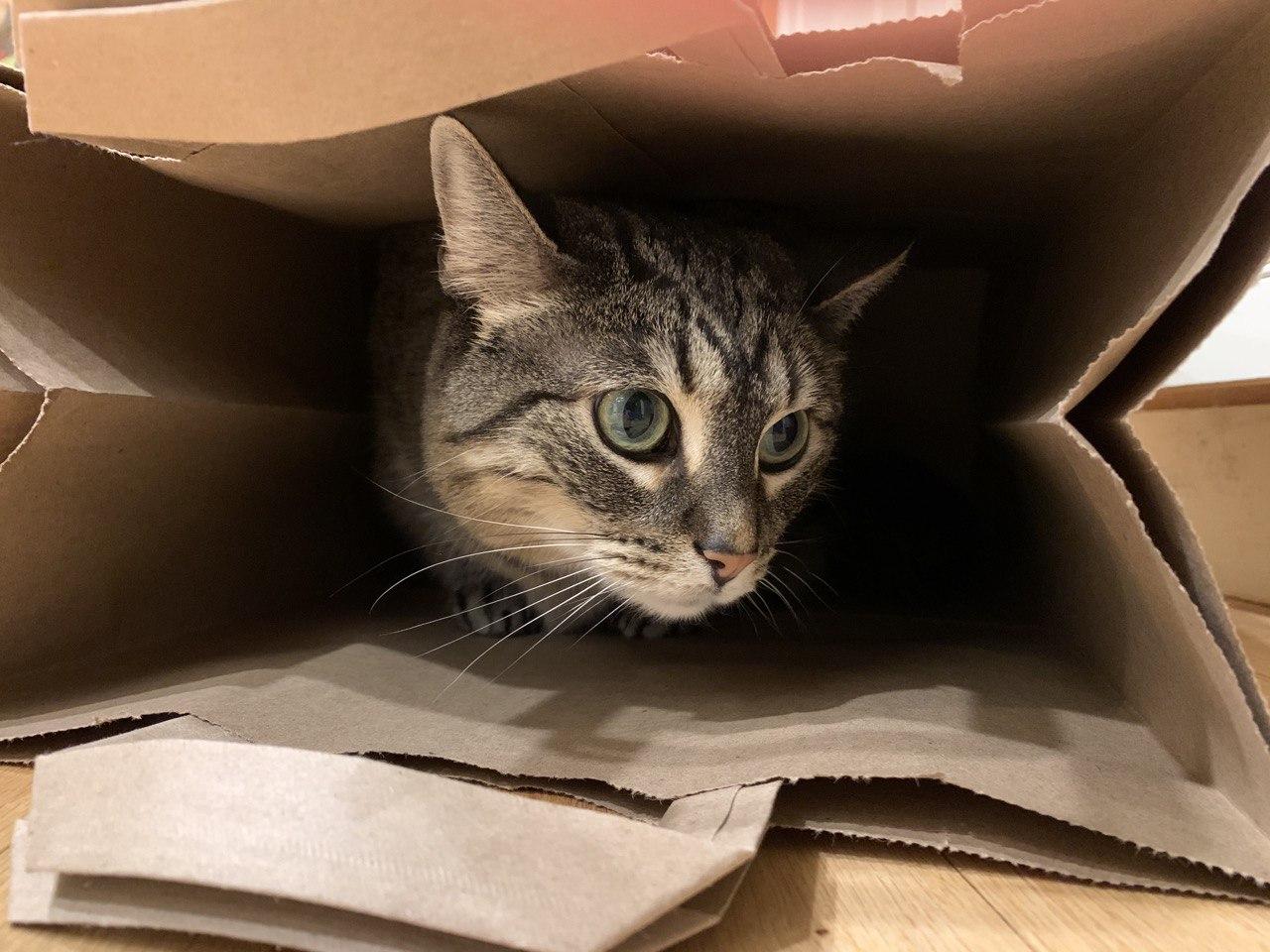

I sent this image to myself over a social media platform which resized and compressed it. The original image had a SHA1 hash of EE96ECC716127B557F65B75A4EB7B7C707689987. The received version had a hash of 1A184BCE4CB8901AA4881877D347405C4160C706. Due to the number of bits in these hashes they are shown in hexadecimal, you may mouse over them to show the full binary equivalent. These hashes have a hamming distance of 89, a huge variance for the 160 bits contained within a SHA1 hash. However the images still looks exactly the same, albeit lower quality. Would you say it’s the same image? I would.

Perceptual hashes tell an entirely different story. Let’s compare the two 64 bit perceptual hashes for my original image and the resized and compressed copy.

01110100100100101100110010001110101000101000100001001101101010100111010010010010110011001000011010100010100010000100110110101010

The SHA1 hashes had a hamming distance of more than 50% of its size. The perceptual hashes have a hamming distance of 1, a difference of less than 2%. In some cases, resized and compressed images can still have identical hashes.

A very rudimentary way of using this data would be to create a list of all the images we know about and their perceptual hash. A hamming distance can be calculated with just a xor and popcnt instruction on modern CPUs. This approach would be fairly fast to start with. With tens of thousands of images you could get results in a few seconds which is likely acceptable performance.

When you start growing to millions of images, sequentially scanning and comparing against every image takes far too long. Because the hash has to be computed against the input each time, you couldn’t use a conventional index. Thankfully, Burkhard and Keller suggested the BK-tree, a tree specifically for metric spaces like hamming distance. Instead of having to search against every image in the database, you can greatly reduce the space you need to evaluate. An excellent explanation and some examples of how they work can be found here.

There’s an implementation of a BK-tree index for PostgreSQL, allowing you to easily keep all of your data in sync and still highly performant to access. Some personal use suggests that this disk-backed tree performs extremely well for very similar lookups. Querying a database with over 15 million hashes with 1 bit of variance takes under 15ms. However, increasing the distance rapidly grows the time required for the search. Searching for everything with a variance of up to 5 bits required more than 1.5 seconds, over 100× slower. Unsurprisingly, maintaining the entire tree in RAM is much more performant and can be searched for much greater distances in less time at the expense of a great deal of RAM being consumed.

Trying to index and reverse image search millions of images has been a fascinating project and a great introduction to the practical usage of trees. Google and TinEye have a far superior index likely also using machine learning to understand and tag the content of images in addition to something like a scale-invariant feature transform which allows for more varied matching. Perhaps in a future project I’ll try implementing something similar but perceptual hashes already work incredibly well for my uses.

Playground

Here you can try uploading a few images to see one implementation of a perceptual hash convert your input to bits. The first image you upload will not be compared to anything. Each image you upload after that will be compared to the previous image with up to 5 image hashes visible at a time. Each compared hash will have different bit values highlighted, with a total count of differences at the end of the line.

Note that hashing images is CPU-intensive and may take a moment to complete.

All of these hashes are generated in browser thanks to the magic of Rust and WebAssembly.